How to Optimize Token Usage and Save Money on AI APIs

As AI APIs become more integral to applications and workflows, managing token usage has become a critical skill. This guide will show you practical strategies to optimize your token consumption and reduce costs without sacrificing quality.

Why Token Optimization Matters

Most AI providers like OpenAI, Anthropic, and Google charge based on token usage. Reducing your token consumption can lead to significant cost savings, especially at scale. Beyond cost, optimization can also improve response times and allow you to fit more context into limited windows.

Cost Comparison

For a typical application making 10,000 API calls per day:

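- Unoptimized: 2,000 tokens per call = 20M tokens/day = ~$400/day (GPT-4, assuming a blended rate of about $0.02 per 1,000 tokens)

- Optimized: 500 tokens per call = 5M tokens/day = ~$100/day (same rate)

- Monthly savings: ~$300/day x 30 days = ~$9,000

Actual rates vary by model and differ for input and output tokens, so check your provider's current pricing before relying on these figures.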
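The arithmetic behind these figures can be reproduced in a few lines of JavaScript. This is only a sketch: the rate of $0.02 per 1,000 tokens is not a published price but the blended rate implied by the numbers above ($400 for 20M tokens), and `estimateDailyCost` is a hypothetical helper name.

```javascript
// Assumed blended rate implied by the figures above: $400 / 20M tokens.
const PRICE_PER_1K_TOKENS = 0.02;

// Estimate daily spend in dollars from call volume and average tokens per call.
function estimateDailyCost(callsPerDay, tokensPerCall, pricePer1K = PRICE_PER_1K_TOKENS) {
  const tokensPerDay = callsPerDay * tokensPerCall;
  return (tokensPerDay / 1000) * pricePer1K;
}

const unoptimizedCost = estimateDailyCost(10000, 2000);
const optimizedCost = estimateDailyCost(10000, 500);
const monthlySavings = (unoptimizedCost - optimizedCost) * 30;

console.log({ unoptimizedCost, optimizedCost, monthlySavings });
```

Swapping in your own call volume and your provider's actual input and output rates gives a more realistic estimate than a single blended figure.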

Key Optimization Strategies

1. Be Concise in Your Prompts

Every word in your prompt costs tokens. Remove unnecessary explanations, examples, and verbosity.

❌ Inefficient

"I would like you to analyze the following text and provide me with a comprehensive summary that captures all the main points and key details. Please make sure to be thorough and include all important information."

~35 tokens

✅ Optimized

"Summarize this text, including main points:"

~8 tokens
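To compare prompt variants quickly without calling a tokenizer, a rough character-based estimate can help. The sketch below assumes the common rule of thumb of about 4 characters per token for English text; real counts depend on the provider's tokenizer and will differ, and `estimateTokens` is a hypothetical helper.

```javascript
// Rough heuristic only: about 4 characters per token for English text.
// Real counts depend on the provider's tokenizer.
function estimateTokens(text) {
  return Math.ceil(text.length / 4);
}

const verbosePrompt =
  "I would like you to analyze the following text and provide me with a " +
  "comprehensive summary that captures all the main points and key details. " +
  "Please make sure to be thorough and include all important information.";
const concisePrompt = "Summarize this text, including main points:";

console.log(estimateTokens(verbosePrompt), estimateTokens(concisePrompt));
```

The estimate is useful for relative comparisons between drafts of a prompt; for billing-accurate numbers, use the tokenizer that matches your model.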

2. Use System Messages Effectively

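For APIs that support system messages (like OpenAI), use them to set context and instructions instead of repeating those instructions in every user message. System messages still count toward your token usage, and because chat APIs such as OpenAI's Chat Completions are stateless, the system message is resent and billed with each request. The saving comes from stating the instructions once per conversation rather than once per user turn.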

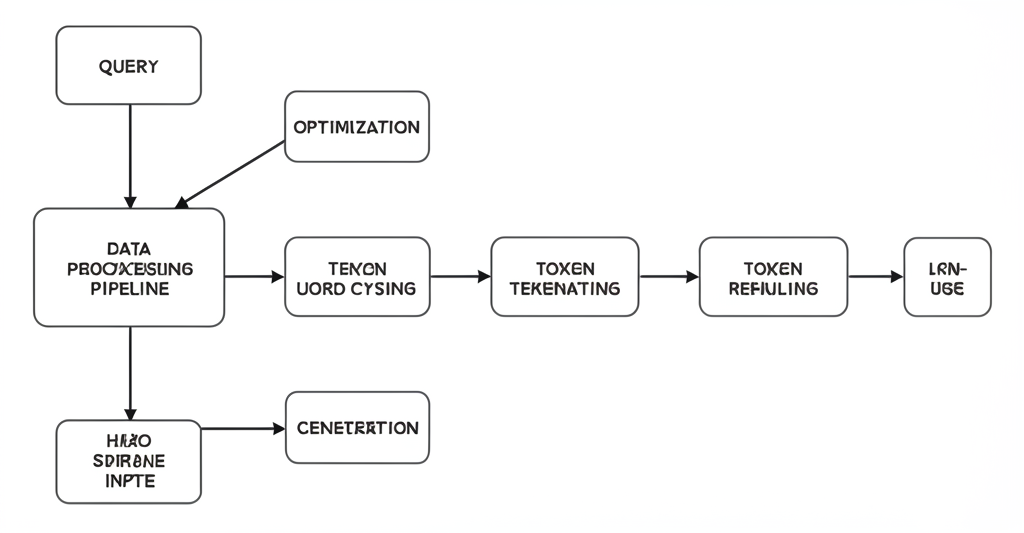
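As a minimal sketch of this idea, the helper below assembles a request's message list with a single system message at the top. The `role`/`content` object shape follows the OpenAI-style chat format; `buildMessages` and the sample prompts are hypothetical, and no API call is made.

```javascript
// Build the message list for one request: the system message appears once,
// at the top, followed by prior turns and the new user input.
function buildMessages(systemPrompt, history, userInput) {
  return [
    { role: "system", content: systemPrompt },
    ...history,
    { role: "user", content: userInput },
  ];
}

// Prior turns contain only the data, not the repeated instructions.
const history = [
  { role: "user", content: "Summarize: <first document>" },
  { role: "assistant", content: "<first summary>" },
];

const messages = buildMessages(
  "You summarize text in three bullet points.",
  history,
  "Summarize: <second document>"
);

console.log(messages.map((m) => m.role));
```

Keeping the instructions out of each user turn means the history grows only by the data you actually send, which matters more the longer the conversation runs.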

3. Compress and Preprocess Input Data

Before sending data to an LLM:

- Remove redundant information

- Summarize long texts

- Extract only relevant sections

- Convert verbose formats (like HTML) to more concise ones
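The last step in the list above might look like the following sketch, which strips HTML down to plain text before it is sent to a model. It is deliberately naive: regex-based stripping is not a real HTML parser, only two entities are decoded, and `htmlToText` is a hypothetical helper. For production input, a proper HTML parsing library is the safer choice.

```javascript
// Naive HTML-to-text conversion for illustration. Regex-based stripping is
// not a full HTML parser and will mishandle unusual markup.
function htmlToText(html) {
  return html
    .replace(/<(script|style)[^>]*>[\s\S]*?<\/\1>/gi, " ") // drop script/style blocks
    .replace(/<[^>]+>/g, " ") // drop remaining tags
    .replace(/&nbsp;/g, " ") // decode two common entities only
    .replace(/&amp;/g, "&")
    .replace(/\s+/g, " ") // collapse runs of whitespace
    .trim();
}

const html =
  '<div class="post"><h1>Title</h1><p>Hello&nbsp;<b>world</b> &amp; more.</p></div>';

console.log(htmlToText(html)); // prints "Title Hello world & more."
```

The markup in this sample accounts for most of its characters, so removing it shrinks the input considerably while leaving the readable content intact.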

4. Use Chunking for Large Documents

When working with large documents, split them into smaller chunks and process each chunk separately. This approach allows you to:

- Stay within context window limits

- Process only what you need

- Parallelize processing for faster results

Chunking Example

// JavaScript example of document chunking.
// Note: maxChunkSize is measured in characters, not tokens.
function chunkDocument(text, maxChunkSize = 1000) {
  const paragraphs = text.split('\n\n');
  const chunks = [];
  let currentChunk = '';

  for (const paragraph of paragraphs) {
    // If adding this paragraph would exceed the chunk size, start a new chunk.
    // A single paragraph longer than maxChunkSize is kept whole, not split.
    if (currentChunk.length + paragraph.length > maxChunkSize && currentChunk.length > 0) {
      chunks.push(currentChunk);
      currentChunk = paragraph;
    } else {
      currentChunk += (currentChunk ? '\n\n' : '') + paragraph;
    }
  }

  // Flush the final chunk.
  if (currentChunk) chunks.push(currentChunk);
  return chunks;
}

5. Cache Responses

Implement caching for common or repetitive queries. This reduces the need to make the same API calls multiple times.
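One simple way to do this is an in-memory cache keyed by the exact prompt text. The sketch below is an assumption-laden illustration, not a production design: `withCache` is a hypothetical wrapper, `fakeModel` stands in for a real API call, and the cache has no size limit or expiry.

```javascript
// Wrap any function that takes a prompt string so repeated prompts are
// served from memory instead of triggering another call.
function withCache(callModel) {
  const cache = new Map();
  return function cachedCall(prompt) {
    const key = prompt.trim();
    if (!cache.has(key)) {
      // Store whatever callModel returns. If it is a Promise, concurrent
      // identical requests share the same in-flight call.
      cache.set(key, callModel(key));
    }
    return cache.get(key);
  };
}

// Stand-in for a real API call (hypothetical); counts invocations.
let apiCalls = 0;
const fakeModel = (prompt) => {
  apiCalls += 1;
  return `response to: ${prompt}`;
};

const cachedModel = withCache(fakeModel);
cachedModel("What is a token?");
cachedModel("What is a token?  "); // same key after trimming

console.log(apiCalls); // prints 1
```

Exact-match caching only helps when identical prompts recur. With a real asynchronous call, a rejected Promise would also be cached, so a fuller version would evict failed entries and add an expiry policy.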

6. Use the Right Model for the Task

Not every task requires the most advanced (and expensive) models:

- Use smaller models for simpler tasks

- Reserve larger models for complex reasoning

- Consider fine-tuned models for specific, repetitive tasks
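In code, this often reduces to a small routing function that picks a model before the request is made. The following is a minimal sketch: the task labels and the model names (`small-model`, `large-model`, `fine-tuned-model`) are placeholders, not real model identifiers, and how a task gets its label is left to your application.

```javascript
// Hypothetical tier names; map them to whatever models your provider offers.
const MODEL_TIERS = new Map([
  ["simple", "small-model"],
  ["complex", "large-model"],
  ["repetitive", "fine-tuned-model"],
]);

// Pick a model from a coarse task label, defaulting to the small tier.
function chooseModel(taskType) {
  return MODEL_TIERS.get(taskType) ?? MODEL_TIERS.get("simple");
}

console.log(chooseModel("complex"));
console.log(chooseModel("unlabeled-task"));
```

Defaulting to the cheapest tier keeps costs down when a task is unlabeled; if quality matters more than cost for unknown tasks, defaulting to the larger tier is the safer choice.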

Measuring the Impact of Optimization

To ensure your optimization efforts are effective, track these metrics:

- Token usage per request: Before and after optimization

- Cost per operation: Calculate the actual cost savings

- Quality of outputs: Ensure optimization doesn't reduce quality

- Response time: Smaller prompts often lead to faster responses
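The quantitative metrics above can be computed from a simple request log. The sketch below assumes each log record has the shape `{ tokens, latencyMs }` and that the log is non-empty; the sample records and the $0.02 per 1,000 tokens rate are illustrative values, not measurements, and `summarizeUsage` is a hypothetical helper. Output quality is not captured here and needs its own evaluation.

```javascript
// Summarize logged requests. Each record is assumed to look like
// { tokens: number, latencyMs: number }, and records must be non-empty.
function summarizeUsage(records, pricePer1K) {
  const totalTokens = records.reduce((sum, r) => sum + r.tokens, 0);
  const totalLatency = records.reduce((sum, r) => sum + r.latencyMs, 0);
  const avgTokens = totalTokens / records.length;
  return {
    avgTokens,
    avgLatencyMs: totalLatency / records.length,
    costPerRequest: (avgTokens / 1000) * pricePer1K,
  };
}

// Illustrative sample logs, not real measurements.
const beforeStats = summarizeUsage(
  [{ tokens: 2000, latencyMs: 900 }, { tokens: 2200, latencyMs: 1100 }],
  0.02
);
const afterStats = summarizeUsage(
  [{ tokens: 500, latencyMs: 400 }, { tokens: 700, latencyMs: 600 }],
  0.02
);

// Fraction of tokens saved per request after optimization.
const tokenReduction = 1 - afterStats.avgTokens / beforeStats.avgTokens;

console.log(beforeStats, afterStats, tokenReduction);
```

Comparing the two summaries side by side shows whether a change reduced tokens and latency at the same time, or traded one for the other.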

Ready to optimize your token usage?

Use our free token counter to measure your prompt and response sizes across different tokenization methods.

Conclusion

Token optimization is both an art and a science. By implementing these strategies, you can significantly reduce your AI API costs while maintaining or even improving the quality of your results. Start by measuring your current usage, then apply these techniques incrementally to see what works best for your specific use case.

Remember that the field of AI is rapidly evolving, so stay updated on new models and pricing structures to continuously refine your optimization strategy.